Linguistic quality is one of the persistent puzzles in our industry, as it is such an elusive concept. It doesn’t have to be, though. But if only quantity matters to you, you are on your way to ruining your company’s linguistic assets.

Because terminology management is not an end in itself, let’s start with the quality objective that users of a prescriptive terminology database are after. Most users access terminological data for support with monolingual, multilingual, manual or automated authoring processes. The outcomes of these processes are texts of some nature. The ultimate quality goal that terminology management supports with regard to these texts could be defined as “the text must contain correct terms used consistently.” In fact, Sue Ellen Wright “concludes that the terminology that makes up the text comprises that aspect of the text that poses the greatest risk for failure.” (Handbook of Terminology Management)

To reach this quality goal, two other goals must be met beforehand. For one, the database must contain correct terminological entries; for another, there must be integrity between the different entries, i.e. entries in the database must not contradict each other.

To attain these two goals, others must be met in turn: the data values within the entries must be correct, and the entries must be complete, i.e. no mandatory data is missing. I call this the mandate to release only correct and complete entries (of course, a prescriptive database may contain pre-released entries that don’t meet these criteria yet).

Let’s see what that means for terminologists who are responsible for setting up, approving or releasing a correct and complete entry. They need to be able to:

- Do research.

- Transfer the result of the research into the data categories correctly.

- Assure integrity between entries.

- Approve only entries that have all the mandatory data.

- Fill in an optional data category, when necessary.
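The "approve only entries that have all the mandatory data" step lends itself to a simple automated gate. The following is a minimal sketch, not a description of any particular terminology management system: the `Entry` class, the `MANDATORY_FIELDS` tuple and the field names in it are all hypothetical and would have to be replaced by the data categories your own database defines.

```python
from dataclasses import dataclass, field

# Hypothetical mandatory data categories; a real termbase defines its own.
MANDATORY_FIELDS = ("term", "language", "definition", "source")


@dataclass
class Entry:
    """A terminological entry reduced to an ID and its data categories."""
    entry_id: str
    data: dict = field(default_factory=dict)


def missing_mandatory(entry):
    """Return the mandatory data categories that are absent or blank."""
    missing = []
    for name in MANDATORY_FIELDS:
        value = entry.data.get(name)
        if value is None or not str(value).strip():
            missing.append(name)
    return missing


def can_release(entry):
    """An entry may be released only if no mandatory data is missing."""
    return not missing_mandatory(entry)


# Illustrative entry: the definition is blank and the source is absent.
draft = Entry("e1", {"term": "single-sourcing", "language": "en", "definition": "  "})
print(missing_mandatory(draft))
print(can_release(draft))
```

A gate like this can only confirm that an entry is complete. Whether the data in it is correct still depends on the terminologist's research.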

Let’s leave aside for a moment that we are all human and that we will botch the occasional entry. Can you imagine if instead of doing the above, terminologists were told not to worry about quality? From now on, they would:

- Stop at 50% of the research, or skip validating the data already present in the entry.

- Fill in only some of the mandatory fields.

- Choose the entry language randomly.

- Add three or four different designations to the Term field.

- ….

Do you think that we could meet our number 1 goal of correct and consistent terminology in texts? No. Instead, a text in the source language would contain inconsistencies, spelling variations, and probably errors. Translations performed by translators would contain the same, possibly worse, problems. Machine translations would be consistent, but they would consistently contain multiple target terms for one source term, etc. The translation memory would propagate issues to other texts within the same product, to the next version of the product, to texts for other products, and so on. Some writers and translators would stop using the terminology database, which means that fewer errors would be challenged and fixed. Others would argue that they must use the database; after all, it is prescriptive.
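One of the problems named here, several target terms for a single source term, is easy to detect mechanically. The sketch below assumes that a prescriptive database should hold exactly one approved target term per source term and target language. The function name `conflicting_targets`, the tuple layout and the sample data are invented for illustration.

```python
from collections import defaultdict


def conflicting_targets(pairs):
    """Group target terms by (source term, target language) and return
    only the groups that contain more than one distinct target term."""
    targets = defaultdict(set)
    for source_term, target_lang, target_term in pairs:
        targets[(source_term, target_lang)].add(target_term)
    return {
        key: sorted(terms)
        for key, terms in targets.items()
        if len(terms) > 1
    }


# Illustrative data only; not taken from any real termbase.
pairs = [
    ("terminology database", "de", "Terminologiedatenbank"),
    ("terminology database", "de", "Termbank"),
    ("entry", "de", "Eintrag"),
]
for (source_term, lang), terms in conflicting_targets(pairs).items():
    print(f"{source_term} [{lang}]: {', '.join(terms)}")
```

Run against an export of the database, a report like this shows where entries contradict each other before the contradiction reaches a translation memory.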

Unreliable entries are poison in the system. With a lax attitude towards quality, you can do more harm than good. Does that mean that you have to invest hours and hours in your entries? Absolutely not. We’ll get to some measures in a later posting. But if you can’t afford correct and complete entries, don’t waste your money on terminology management.

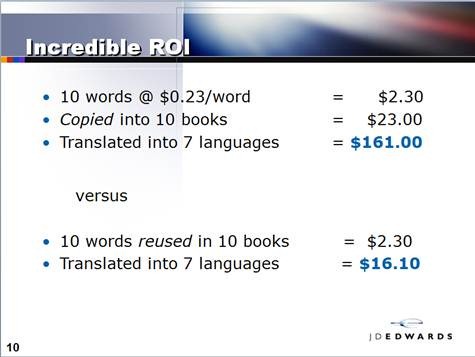

Ben’s argument for single-sourcing was and is simple: Write it once, reuse it multiple times; translate it once, reuse the translated chunk multiple times.

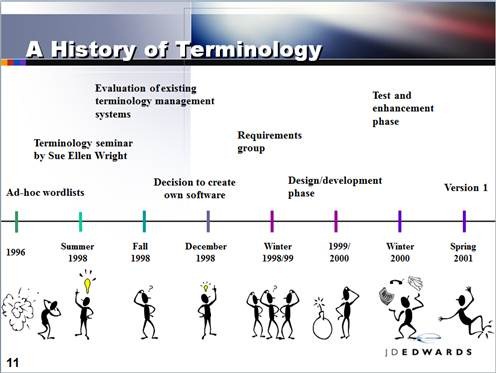

At that time, the J.D. Edwards terminology team and project were in their infancy. In fact, the TMS was just about to go live, as the timeline presented in Antwerp shows.

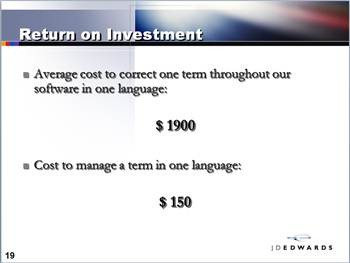

The average time it took to create one entry in the terminology database had already been measured. At that early stage of the project, one terminological entry cost $150.
