One of the main reasons for having a concept-oriented terminology database is that we can set up one definition to represent the concept and then attach all its designations, including all equivalents in the target language. This helps save cost, drive standardization, and increase usability. Doublettes (duplicate entries for the same concept) offset these benefits.

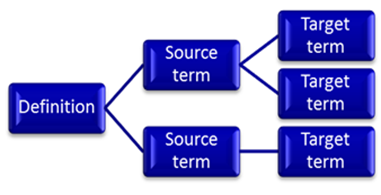

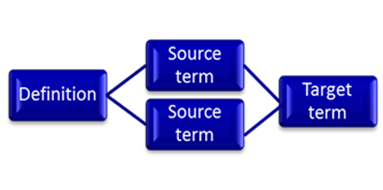

The diagrams are simplifications, of course, but they show visually why concept orientation is necessary when you are dealing with more than one language in a database. To explain it briefly: once the concept is established through a definition and other concept-related metadata, source and target designators can be researched and documented. Sometimes this research will result in multiple target equivalents when there was only one source designator; sometimes it is just the opposite, where, say, the source language uses a long and a short form, but the target language only has a long form.

If you have doublettes in your database, it not only means that the concept research happened twice and, to a certain degree, unsuccessfully; it also means that designators have to be researched twice and their respective metadata has to be documented twice. The more languages there are, the more expensive that becomes. Rather than having, say, a German terminologist research the concept denoted by automated teller machine, ATM, electronic cash machine, cash machine, etc. two or more times, research takes place once, and the German equivalent Bankautomat is attached, potentially as the equivalent for all English synonyms.
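The one-concept, many-designations structure described above can be sketched as a small data model. This is only an illustration: the class names, the `usage_note` field, and the wording of the definition are assumptions made for the example, not the schema of any particular terminology tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Designation:
    term: str
    language: str          # language code, e.g. "en" or "de"
    usage_note: str = ""   # hypothetical metadata field


@dataclass
class ConceptEntry:
    # One definition represents the concept; all designations hang off it.
    definition: str
    designations: List[Designation] = field(default_factory=list)

    def terms(self, language: str) -> List[str]:
        """Return all designations recorded for one language."""
        return [d.term for d in self.designations if d.language == language]


# Illustrative entry; the definition text is invented for this sketch.
entry = ConceptEntry(
    definition="Machine that lets bank customers withdraw cash without a teller.",
    designations=[
        Designation("automated teller machine", "en"),
        Designation("ATM", "en"),
        Designation("electronic cash machine", "en"),
        Designation("cash machine", "en"),
        Designation("Bankautomat", "de"),
    ],
)

print(entry.terms("en"))
print(entry.terms("de"))
```

Because the German equivalent is attached to the concept rather than to one English term, it serves all four English synonyms without being researched again.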

Doublettes also make it more difficult to work towards standardized terminology. When you set up a terminological entry including the metadata to guide the consumer of the terminological data in usage, standardization happens even if there are multiple synonyms. Because they are all in one record, the user has, e.g., usage, product, or version information to choose the applicable term for their context. When the synonyms are spread across doublettes instead, the data is harder to use, because the reader has to compare two entries to find the guidance.

And lastly, if that information is in two records, it might be harder to discover. Depending on the search functionality, the designator, and the language of the designator, both doublettes might display in one search. But chances are that only one is found and taken for the only record on the concept. As data volumes increase, more doublettes will occur, yet retrievability is a critical part of usability. And without usability, standardization is even less likely and even more money is wasted.
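One way a tool could surface likely doublettes is to index every designator by language and flag entries that share one. The following is a minimal sketch under stated assumptions: the entry IDs, the input shape, and the function name `find_doublette_candidates` are hypothetical, and matching on a case-folded string only yields candidates for a terminologist to review, since homonyms will also match.

```python
from collections import defaultdict


def find_doublette_candidates(entries):
    """Map (language, normalized term) to the IDs of entries sharing it.

    `entries` is assumed to be a dict of entry ID -> list of
    (language, term) pairs.
    """
    index = defaultdict(set)
    for entry_id, designations in entries.items():
        for language, term in designations:
            # Normalize so that "ATM" and "atm" land on the same key.
            key = (language, term.strip().casefold())
            index[key].add(entry_id)
    # Keep only designators that occur in more than one entry.
    return {key: sorted(ids) for key, ids in index.items() if len(ids) > 1}


entries = {
    "E1": [("en", "automated teller machine"), ("en", "ATM"), ("de", "Bankautomat")],
    "E2": [("en", "cash machine"), ("en", "atm")],
    "E3": [("en", "teller")],
}

candidates = find_doublette_candidates(entries)
print(candidates)  # {('en', 'atm'): ['E1', 'E2']}
```

Here E1 and E2 are flagged because both carry the English designator "ATM", which suggests they may describe the same concept and should be merged.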

Enforce mandatory data when a terminologist releases (approves or fails) an entry. If you have decided that five out of your ten data categories (DCs) are mandatory, let the tool help terminologists by not letting them get away with a shortcut or an oversight.
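The mandatory-data check at release time could look roughly like the sketch below. The five data category names, the status values, and the `release` function are assumptions chosen for illustration; a real tool would use its own configuration.

```python
# Hypothetical set of five mandatory data categories.
MANDATORY_DCS = {"definition", "term", "language", "part_of_speech", "source"}


class MissingMandatoryData(ValueError):
    """Raised when an entry is released without all mandatory data."""


def is_filled(value) -> bool:
    # Treat None and whitespace-only strings as missing.
    return value is not None and str(value).strip() != ""


def release(entry: dict, status: str) -> dict:
    """Approve or fail an entry, but only if all mandatory DCs are filled."""
    if status not in {"approved", "failed"}:
        raise ValueError("status must be 'approved' or 'failed'")
    missing = sorted(dc for dc in MANDATORY_DCS if not is_filled(entry.get(dc)))
    if missing:
        raise MissingMandatoryData(
            "Cannot release entry; missing: " + ", ".join(missing)
        )
    # Return a copy so the caller's draft is left unchanged.
    return {**entry, "status": status}


draft = {"definition": "Machine for withdrawing cash.", "term": "ATM", "language": "en"}
try:
    release(draft, "approved")
except MissingMandatoryData as err:
    print(err)  # Cannot release entry; missing: part_of_speech, source
```

The check applies to both approving and failing an entry, so neither outcome can be recorded on an incomplete record.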
